Understanding Normalization and Denormalization in Databases

Database design plays a crucial role in building efficient and maintainable systems.

In this process, Normalization and Denormalization are key concepts essential for optimizing data consistency and performance.

This article will explore the concepts, purposes, pros and cons, and real-world applications of data normalization and denormalization in detail and systematically.

What is Data Normalization?

Normalization is the process of structuring data in a relational database to minimize data redundancy and maintain data integrity.

By logically separating database tables and defining relationships between them, normalization enables efficient data management and retrieval.

Purpose of Normalization

- Minimize Data Redundancy: Prevents the same data from being stored in multiple places, saving storage space and maintaining data consistency.

- Ensure Data Integrity: Maintains consistency and accuracy of data to prevent errors.

- Flexible Data Structure: Makes it easy to change or expand the database structure.

- Efficient Data Management: Reduces anomalies that can occur during data insertion, modification, or deletion.

Steps of Normalization (Normal Forms)

Normalization involves multiple steps, each following specific rules. Let’s examine each normal form in detail through examples.

First Normal Form (1NF)

First Normal Form (1NF) states that all fields must contain atomic values, meaning each column should contain a single value and should not include repeating groups or arrays.

1NF Conditions:

- All fields must have atomic values; that is, each cell should contain only a single value.

Example

Table violating 1NF (contains group data):

| StudentID | StudentName | EnrolledCourses |

|---|---|---|

| 1 | Hong Gil-dong | Math, Science |

| 2 | Kim Young-hee | English, History, Math |

| 3 | Lee Chul-soo | Science |

- Problem: The

EnrolledCoursescolumn contains multiple courses in a single cell. This is group data, which violates the 1NF rule. Each cell should contain only one value.

Table transformed into 1NF:

| StudentID | StudentName | EnrolledCourse |

|---|---|---|

| 1 | Hong Gil-dong | Math |

| 1 | Hong Gil-dong | Science |

| 2 | Kim Young-hee | English |

| 2 | Kim Young-hee | History |

| 2 | Kim Young-hee | Math |

| 3 | Lee Chul-soo | Science |

- After transformation: The

EnrolledCoursecolumn now has only one course per record for each student. Since each cell contains only one value, it satisfies 1NF.

Second Normal Form (2NF)

Second Normal Form (2NF) requires that the table first satisfy 1NF and eliminates partial dependencies so that all non-key attributes are fully functionally dependent on the entire primary key. In cases where composite keys are used, non-key attributes should not depend on only part of the primary key.

2NF Conditions:

- Must satisfy 1NF.

- There should be no attributes (columns) that are dependent on part of the primary key.

Example

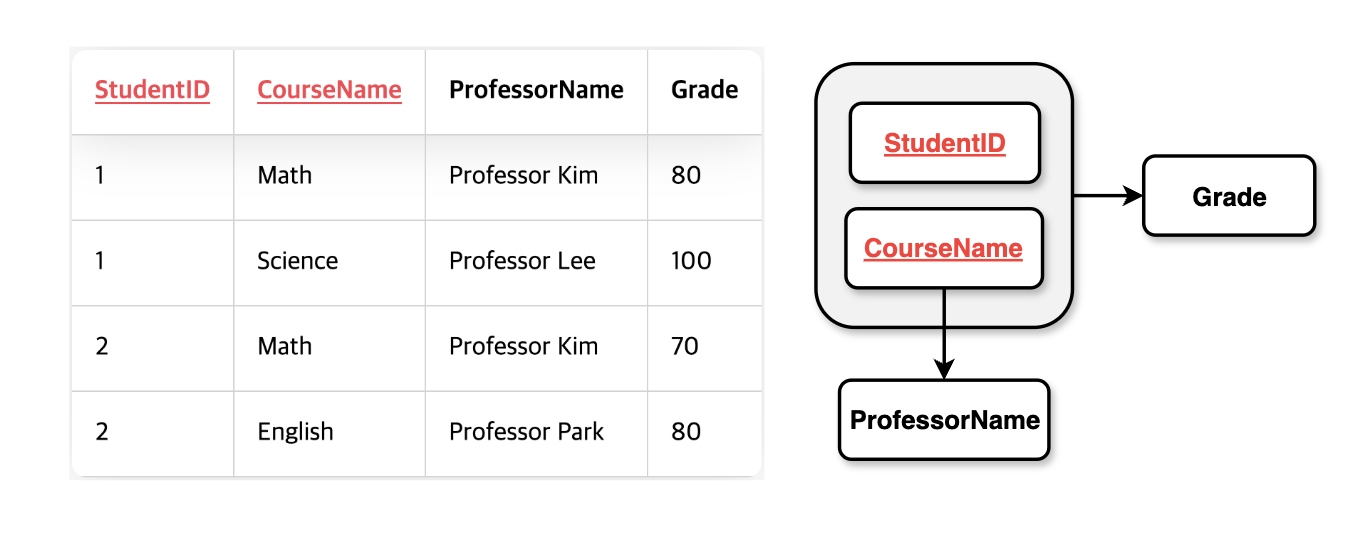

Table violating 2NF (contains partial dependency):

- Primary Key:

StudentID + CourseName(Two columns combined as primary key) - Problem:

Gradedepends onStudentID + CourseName, butProfessorNamedepends only onCourseName. This means there’s a partial dependency on part of the primary key, violating 2NF.

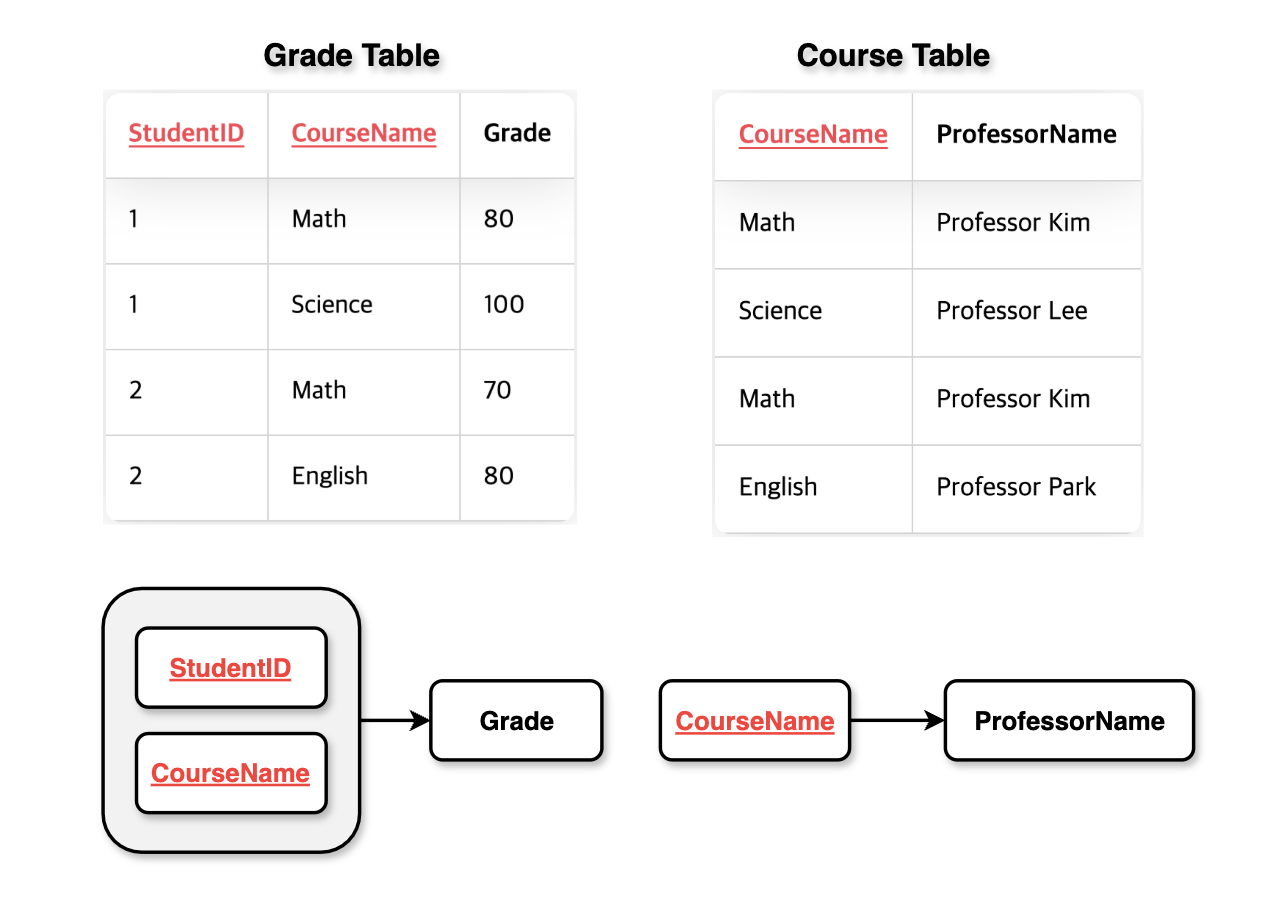

Table transformed into 2NF:

- After transformation: The grade information and course information are separated into different tables, removing the partial dependency.

Third Normal Form (3NF)

Third Normal Form (3NF) requires that the table first satisfy 2NF and eliminates transitive dependencies so that non-key attributes do not depend on other non-key attributes.

3NF Conditions:

- Must satisfy 2NF.

- Non-key attributes should not depend on other non-key attributes.

Example

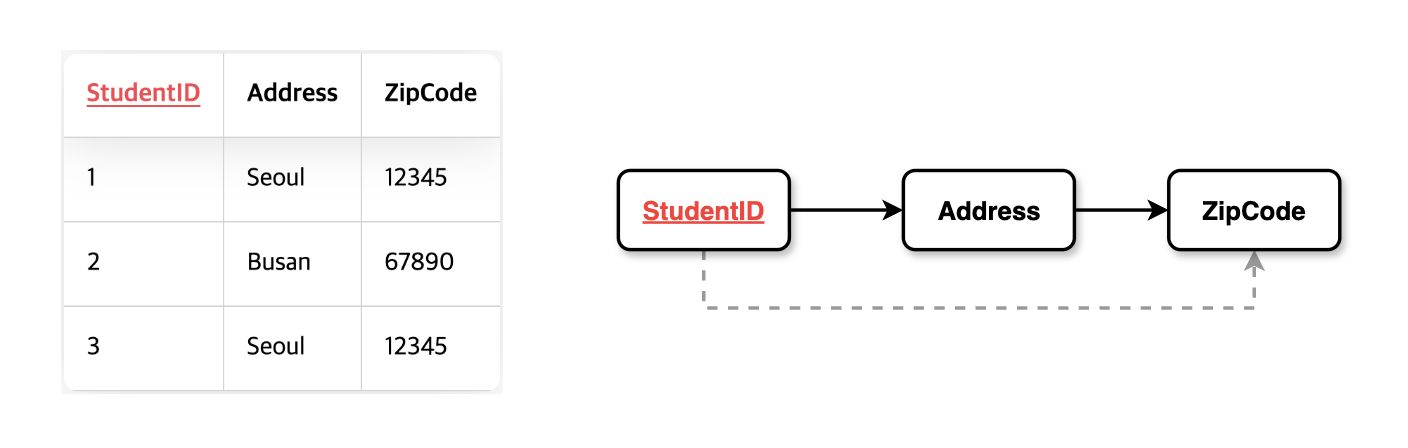

Table violating 3NF (contains transitive dependency):

- Primary Key:

StudentID - Problem:

Addressdepends onStudentID, andZipCodedepends onAddress, a non-key attribute. This creates a transitive dependency whereZipCodeindirectly depends onStudentIDthroughAddress.

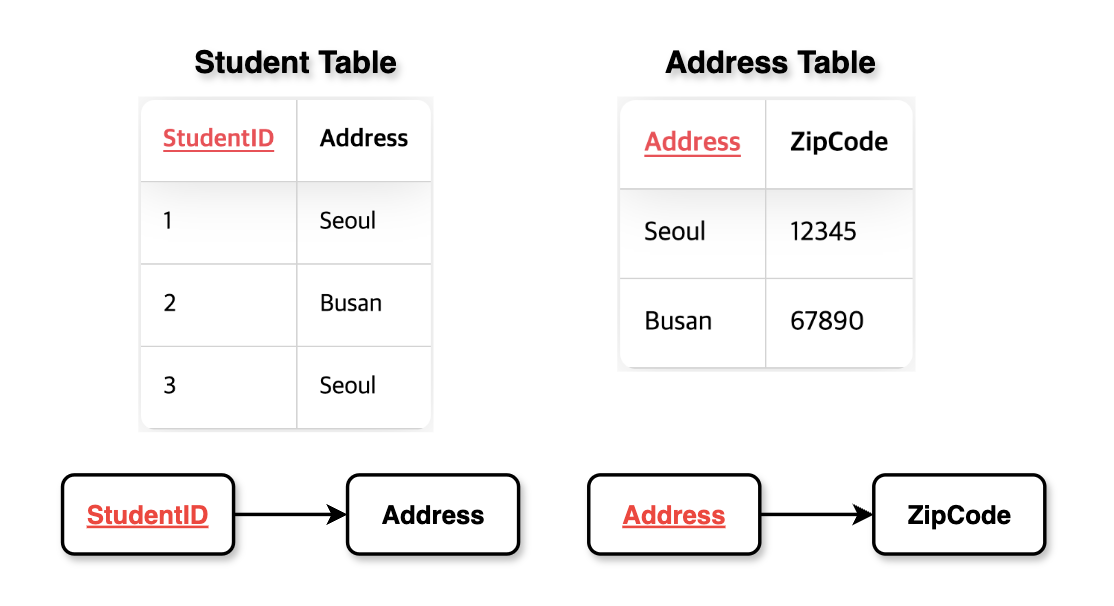

Table transformed into 3NF:

- After transformation:

ZipCodeis separated into an Address table, removing the transitive dependency.

Boyce-Codd Normal Form (BCNF)

Boyce-Codd Normal Form (BCNF) is a stronger version of 3NF, where every determinant must be a candidate key. This addresses anomalies that can occur in certain situations not covered by 3NF.

BCNF Conditions:

- Must satisfy 3NF.

- Every determinant must be a candidate key.

Example

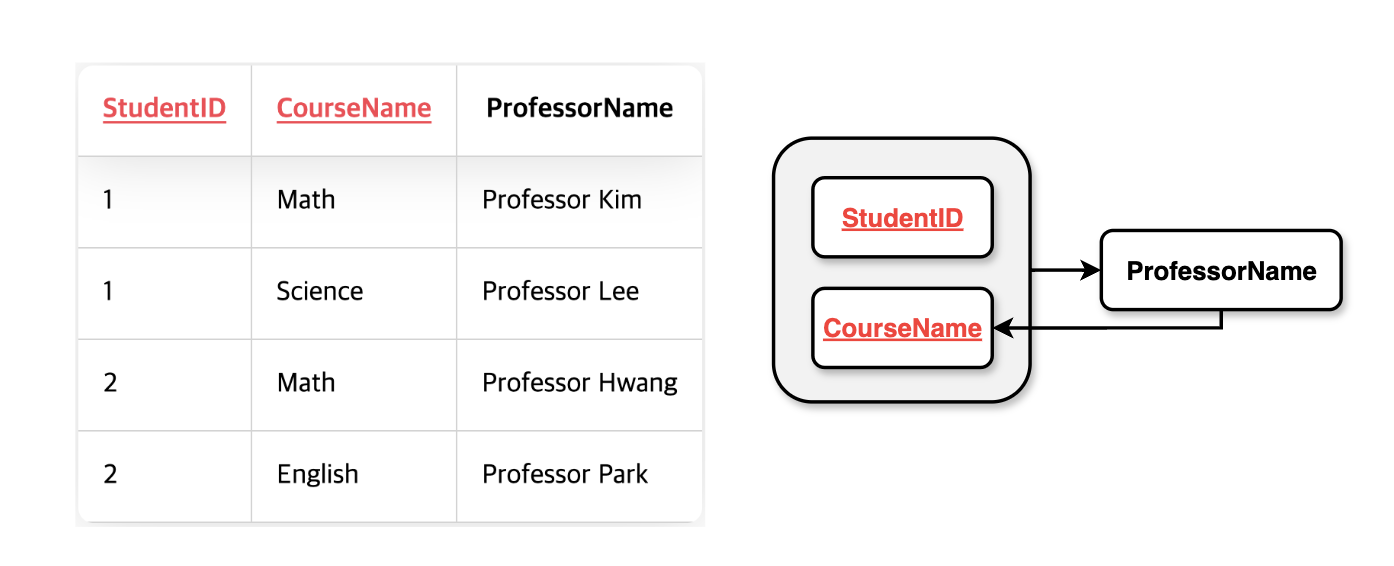

Table violating BCNF (determinant is not a candidate key):

- Primary Key:

StudentID + CourseName - Problem:

ProfessorNameis a determinant forCourseName, but it is not a candidate key. In other words, a situation arises where the determinant is not a candidate key, resulting in a violation of BCNF (Boyce-Codd Normal Form).

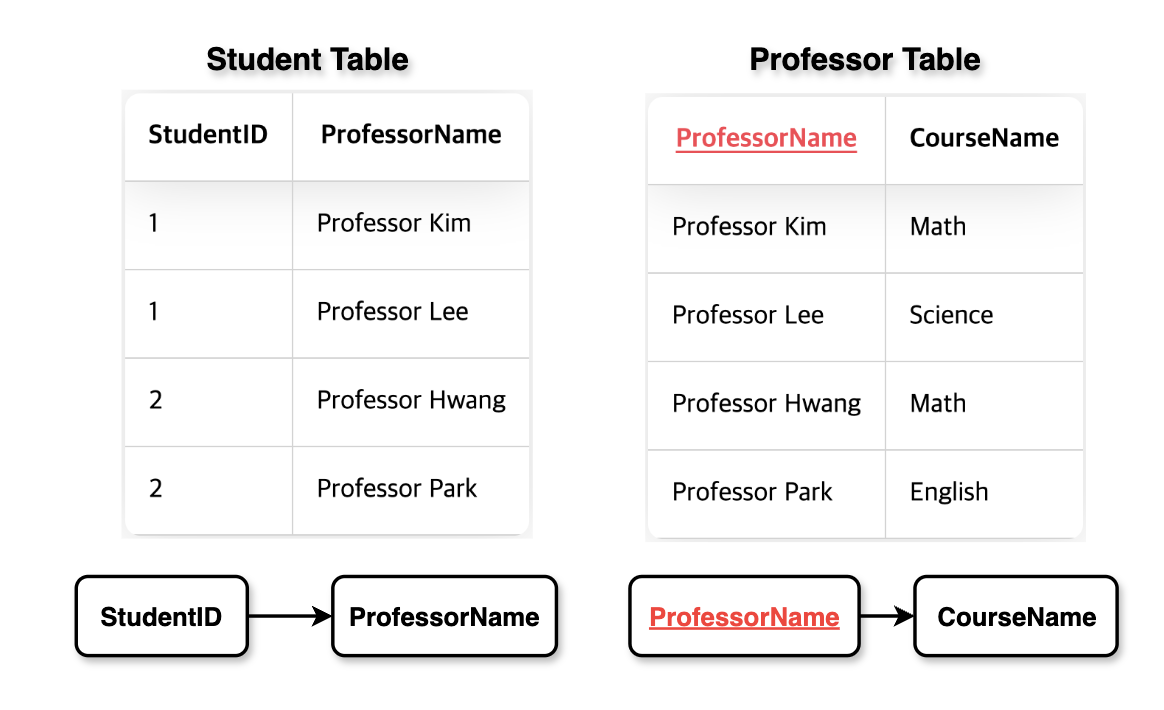

Table transformed into BCNF:

- After transformation:

ProfessorNameandCourseNameare separated into different tables, satisfying BCNF.

Advantages and Disadvantages of Normalization

Advantages:

- Reduced Data Redundancy: Saves storage space and maintains data consistency by minimizing duplicate data.

- Improved Data Integrity: Reduces anomalies during insert, delete, and update operations.

- Flexible Structure: Facilitates easy expansion and maintenance of the database.

Disadvantages:

- Increased Joins: May lead to performance degradation due to the need for joins across multiple tables.

- Complex Queries: Accessing data may require complex queries.

What is Denormalization?

Denormalization is the process of intentionally introducing redundancy into a database for performance optimization, such as combining tables or allowing duplicate data. It is primarily used to optimize read performance and reduce complex joins.

Purpose of Denormalization

- Performance Improvement: Increases query speed by consolidating frequently accessed data into a single table, reducing the need for joins.

- Simplified Queries: Simplifies query writing and maintenance by reducing the need for complex joins.

- Read Optimization: Maximizes read performance in environments where data retrieval is frequent.

Types of Denormalization

- Table Merging: Combines related tables into one, allowing data duplication.

- Adding Duplicate Columns: Stores frequently used data in multiple tables.

- Caching: Stores calculated results or aggregated data separately for quick access during queries.

- Adding Indexes: Improves performance by adding indexes to increase search speed.

Advantages and Disadvantages of Denormalization

Advantages:

- Improved Read Performance: Reduces join operations, enhancing data retrieval speed.

- Simplified Queries: Allows fetching necessary data from a single table, simplifying query writing.

- Faster Response Time: Particularly advantageous in OLAP (Online Analytical Processing) environments.

Disadvantages:

- Increased Data Redundancy: Increases storage requirements and complicates maintaining data consistency.

- Complex Data Management: Requires managing all duplicate data during insert, update, and delete operations.

- Integrity Issues: Maintaining integrity constraints becomes challenging due to duplicate data.

Comparison of Normalization and Denormalization

| Feature | Normalization | Denormalization |

|---|---|---|

| Purpose | Minimize data redundancy, maintain integrity | Performance improvement, read optimization |

| Data Redundancy | Minimized | Intentionally allowed |

| Query Complexity | Requires joins, complex queries possible | Reduced joins, simpler queries |

| Data Integrity | High | May decrease |

| Storage Space | Efficient | Increased |

| Maintenance | Easier | May become complex |

| Use Cases | OLTP (Online Transaction Processing) systems | OLAP, Data Warehouse systems |

Practical Applications

1. E-commerce Websites

Applying Normalization:

- Separate tables for customers, orders, and products to maintain data integrity.

- Example: By separating customer and order tables, changes in customer information can be consistently applied across all order records.

Applying Denormalization:

- Duplicate customer names or addresses in the order table to speed up frequently accessed order information.

- Example: Include customer information in the order table to quickly display customer names on the order list page.

2. Social Media Platforms

Applying Normalization:

- Manage data such as users, posts, and comments in separate tables.

- Example: By separating user and post tables, any changes in user information are automatically reflected in posts.

Applying Denormalization:

- Store like counts or comment counts redundantly in posts to speed up viewing popular posts.

- Example: Save the number of likes in the post table to quickly retrieve popular posts in real-time.

Optimization of Normalization and Denormalization

When designing databases, normalization and denormalization are not mutually exclusive concepts; it’s important to appropriately combine them based on the situation. Here are some strategies for optimization:

- Start with Normalization in Initial Design: Design with a normalized structure first to maintain data integrity and consistency.

- Apply Denormalization After Performance Analysis: Optimize parts where performance bottlenecks are found by applying denormalization.

- Utilize Indexes: Even in a normalized database, performance can be improved by utilizing appropriate indexes.

- Introduce Caching Strategies: Cache frequently accessed data to reduce database access.

- Continuous Monitoring and Optimization: Continuously monitor database performance and adjust the structure as needed.

Conclusion

Data normalization and denormalization play significant roles in database design.

Normalization minimizes data redundancy and maintains integrity, enabling efficient data management, but can sometimes lead to performance degradation.

On the other hand, denormalization allows redundancy for performance gains but may face challenges in maintaining data integrity.